For the last year I have been working on the final project for my Master’s degree in Computer Science. My college, the Academic College of Tel-Aviv-Yaffo, doesn’t require a thesis but uses a combination of a final test (covering the core subjects of both the Bachelor and the M.Sc. degrees) and a final project done with one of the doctors/professors at the college. My project is on semantic similarities, with Professor Gideon Dror, and I am nearly done: all that is left is to present my work in front of my professor and a faculty member.

I have decided to first present my work here and then actually do the presentation.

The project was done mostly in Python (which I had no knowledge of beforehand) and its first part was done as a possible contribution to the NLTK library.

The first part of the project was about implementing methods to find semantically similar words from input triplets of Context Relations. A Context Relation triplet is two words and their relation to each other, extracted from a sentence. It was shocking to find in the end that NLTK hasn’t implemented a way to extract Context Relations from a text (it has a few demos built by hand), and it seems that implementing this requires a knowledge of linguistics that I just don’t possess.

The second part of the project was to extract the Semantic Similarities of words from the website Yahoo Answers. The idea is that with enough data extracted from different categories, an algorithm can determine the distance between words.

On to the presentation:

For this discussion we will ignore the part “without being identical”. In this project identical is included in similar.

Are Horse and Butterfly similar? The first response should be, of course, NO. But it depends: comparing horse and butterfly to house reveals that horse and butterfly are similar. It just depends on the context…

Likewise, when comparing a horse to a zebra the response would be YES. But looking at a sentence such as:

The nomad has ridden the ____

and looking at horse, zebra and camel which is more similar in this context?

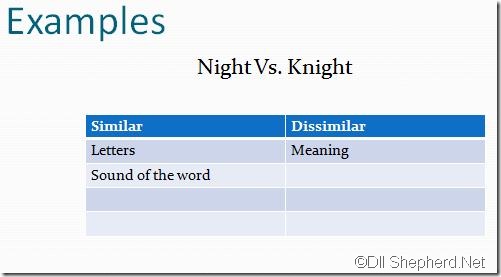

This time the only similarity between these words is the way they are written and pronounced. Their context relations should be very dissimilar no matter the text. But imagine using a naive algorithm that only counts the number of occurrences of each word in a text: is it really inconceivable for these words to have close counts?

Humans use similarities to learn new things: a zebra is similar to a horse with stripes. But similarity is also used as a tool for our memory. In learning new names, it helps to associate the name with the person by using something similar. For example, to remember the name Olivia it could be useful to imagine that person with olive-like eyes.

In software, search engines use similar words to return more results. For example, a few days ago I searched for a driver chair and one of the top results was a video of a driver seat.

Possible future uses for similar words could be in AI software. There is a yearly contest named the Loebner Prize for the “computer whose responses were indistinguishable from a human's”. If we could teach a computer a baseline of sentences and then extend it using similar words (the way humans learn), it could theoretically be “indistinguishable from a human's”.

Imagine having the AI memorize chats, simply by extracting chats in Facebook or Twitter. Then have the AI extend those sentences with similar words. For example, in a real chat:

- Have you fed the dog?

Could be extended to:

- Have you fed the snake?

(some people do have pet snakes… and I can imagine a judge trying to trip an AI with this kind of question…)

A simple definition: if we have a sentence containing word, and we replace word with word’ and the sentence is still correct, then the two words are Semantically Similar. From now on, Similarity actually means Semantic Similarity.

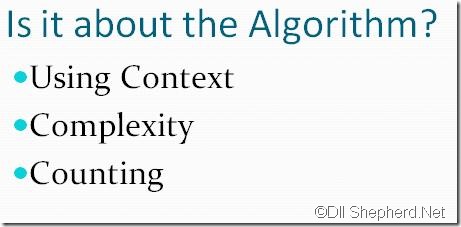

From the examples we can see that Similarity is all about Context, Algorithm and Text.

As we could see in the examples, the Context of the words makes a large difference to whether or not two words are similar. Unlike Algorithm and Text, it has nothing to do with the implementation of finding the similarity.

Some Algorithms use Context Relation to give value to the context in which the words appear. Extracting Context Relation from text is a very complicated task that has yet to be implemented in NLTK; the library does have a couple of examples that were created by hand.

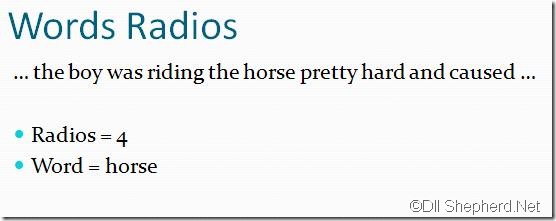

Consider all the words within a distance of 4 words from the word Horse. One of the Algorithms we will examine uses this as a simpler form of Context.
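To make the idea of a 4-word distance concrete, here is a minimal sketch of collecting the neighbours of a word. The function name `context_window` and the sample sentence are made up for illustration; this is not the project's actual code.

```python
def context_window(tokens, target, radius=4):
    """Collect the words within `radius` positions of each occurrence of `target`."""
    neighbours = []
    for i, token in enumerate(tokens):
        if token == target:
            start = max(0, i - radius)
            neighbours.extend(tokens[start:i])              # words before the target
            neighbours.extend(tokens[i + 1:i + 1 + radius])  # words after the target
    return neighbours


# Hypothetical sample sentence, split on whitespace only.
tokens = "the nomad has ridden the horse across the wide dry desert plain".split()
window = context_window(tokens, "horse")
print(window)
```

Words that keep showing up in each other's windows, or that share many of the same window words, are the raw material a counting algorithm can then score.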

Another form of Context extraction is separating the text based on category. Then each category adds a different Similarity value and those can be added together.

Algorithms that ignore the Context of the word are therefore less accurate than those that use it, but the ones that use it are also more complex. The complexity comes either from using Context Relation (with its complex extraction) or from using a word’s radius, which means individual work for each word.

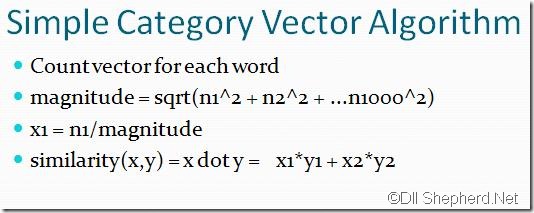

All the Algorithms use some form of counting mechanism to determine the Similarity/Distance between the words.

Depending on the Algorithm, a different scoring is done for each word. Then the Algorithm determines how to convert that score into the Distance between the words, which just means calculating the Similarity.

Text is a bit misplaced here because it is a part of the Context and is used inside the Algorithms. Choosing the right text therefore is as essential a part as choosing the right Algorithm.

But imagine a text that contains only the words:

This is my life. This is my life…

All the practical Algorithms shown here will tell you that “this” and “life” are Similar words – based on this text alone.
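A tiny word count over that text shows why. This is only an illustration with Python's standard `Counter`, not one of the project's Algorithms: every word occurs equally often, so a method that only sees counts has nothing to tell “this” and “life” apart.

```python
from collections import Counter

# The degenerate text from the example, repeated five times.
text = "This is my life. " * 5
words = text.lower().replace(".", "").split()
counts = Counter(words)
print(counts)
```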

In my second implementation of Similarity Algorithms I used extracted text from several categories of Yahoo Answers. Yahoo Answers is a question+answer repository that contains thousands of questions and answers. For my Algorithms I had to extract 2GB of data from the site (just so I had enough starting data).

The Algorithms can be separated into two groups: those that use Context Relation (and are therefore purely theoretical until an extractor for Context Relation is implemented), and those that use a Category Vector as a form of Context for the words.

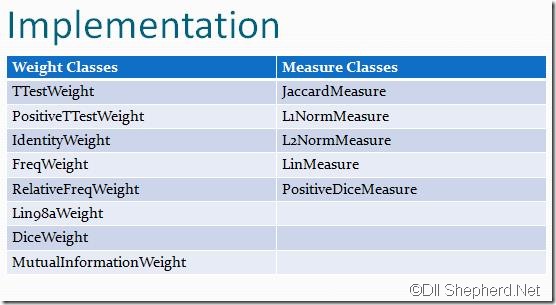

All the Context Relation Algorithms use these two inner classes: Weight and Measure. Weight is the inner class that gives a score to a Context Relation; the Weight is important since a Context Relation that appears only once in a text should not have the same score as one that appears ten times. The Measure inner class calculates the distance between two words using the Weight inner class. Using only these classes, the user can be given a Similarity value for two words.

The Algorithms in this section implement nearest-neighbor searches. We use them to find the K most similar words in the text, not just how similar two words are.
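As a rough sketch of how a Weight and a Measure can fit together, the two classes below pair a raw-frequency weight with a cosine measure. The class names, method names, and the hand-written triples are all assumptions made for illustration; they were picked for brevity and are not necessarily the combinations implemented in the project.

```python
import math
from collections import Counter


class FrequencyWeight:
    """Weight: score a context relation by how often it was seen with a word."""

    def __init__(self, triples):
        # Each triple is (word, relation, other_word); the context is (relation, other_word).
        self.counts = Counter((w, (r, o)) for w, r, o in triples)

    def contexts(self, word):
        return {ctx for (w, ctx) in self.counts if w == word}

    def score(self, word, context):
        return self.counts[(word, context)]


class CosineMeasure:
    """Measure: compare two words through the weights of their contexts."""

    def __init__(self, weight):
        self.weight = weight

    def similarity(self, a, b):
        ctx_a, ctx_b = self.weight.contexts(a), self.weight.contexts(b)
        dot = sum(self.weight.score(a, c) * self.weight.score(b, c) for c in ctx_a & ctx_b)
        norm_a = math.sqrt(sum(self.weight.score(a, c) ** 2 for c in ctx_a))
        norm_b = math.sqrt(sum(self.weight.score(b, c) ** 2 for c in ctx_b))
        if norm_a == 0 or norm_b == 0:
            return 0.0
        return dot / (norm_a * norm_b)


# Hand-written triples standing in for the output of a Context Relation extractor.
triples = [
    ("horse", "obj-of", "ride"), ("horse", "obj-of", "ride"), ("horse", "obj-of", "feed"),
    ("zebra", "obj-of", "feed"), ("camel", "obj-of", "ride"), ("house", "obj-of", "build"),
]
measure = CosineMeasure(FrequencyWeight(triples))
print(measure.similarity("horse", "camel"), measure.similarity("horse", "zebra"))
```

Because the Measure only talks to the Weight through `contexts` and `score`, swapping in a different Weight or Measure does not require touching the other class.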

Taken from James R. Curran (2004), From Distributional to Semantic Similarity

In my Theoretical work I implemented some of the Weight and Measure inner classes from James R. Curran’s paper From Distributional to Semantic Similarity.

I am not going to go into lengthy discussion on how they work because the paper discusses all of this.

I am going to say that the Similarities turn out different for each combination of Weight X Measure and that it is fairly easy to set a combination up or to implement a new Weight/Measure class.

The classes I chose to implement are taken from Scaling Distributional Similarity to Large Corpora, James Gorman and James R. Curran (2006). These classes are used to find the K most similar words to a given word.

The simplest algorithm is a brute force one. First we calculate the Distance Matrix between our word and all the rest of the words in the text and then we search for the K most Similar words.

The disadvantage of this algorithm is that the calculation for finding the K-nearest words for “hello” can’t be reused for the word “goodbye” (actually only one calculation can be reused here, and that is the one between “hello” and “goodbye”).
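The brute-force search described above can be sketched in a few lines. The function `k_most_similar`, the toy `contexts` data, and the Jaccard similarity used to score pairs are all stand-ins chosen for illustration, not the project's implementation.

```python
import heapq


def k_most_similar(target, vocabulary, similarity, k):
    """Score `target` against every other word, then keep the k best."""
    scored = ((similarity(target, other), other) for other in vocabulary if other != target)
    return [word for _, word in heapq.nlargest(k, scored)]


# Toy context sets standing in for real extracted data.
contexts = {
    "horse": {"ride", "feed", "saddle"},
    "camel": {"ride", "feed", "desert"},
    "zebra": {"feed", "stripes"},
    "house": {"build"},
}


def jaccard(a, b):
    """Share of contexts the two words have in common."""
    shared = contexts[a] & contexts[b]
    combined = contexts[a] | contexts[b]
    return len(shared) / len(combined) if combined else 0.0


nearest = k_most_similar("horse", contexts, jaccard, 2)
print(nearest)
```

Every call rescans the whole vocabulary for its own target, which is exactly why the work done for one word helps so little with the next.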

I am not going to go into the other implementations here since they are more complex. I might write another post in the future about those algorithms.

If you are interested, the Python implementation can be found here (or you can just read Scaling Distributional Similarity to Large Corpora).

There are two practical Algorithms that I have implemented.

This simple algorithm is very fast and can be preprocessed for even faster performance. By simply saving the count of each word per category, the Algorithm can be made as fast as reading the preprocessed file. In small examples of just 50MB of data the Algorithm took only a few seconds to produce a result. Using the full 2GB of data it takes ~10 minutes to produce results for ~350 pairs of compared words. Because of the large amount of data, however, it must be opened in chunks (a chunk per category) or an Out of Memory Exception is thrown.
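Here is a minimal sketch of the per-category counting idea. The post does not spell out the formula used to compare two words, so cosine similarity between the count vectors is an assumption here, and the function names and sample texts are invented for the example.

```python
import math
from collections import Counter


def category_vectors(texts_by_category):
    """Map each word to its per-category counts, e.g. {'bread': {'food': 2}}."""
    vectors = {}
    for category, text in texts_by_category.items():
        for word, count in Counter(text.lower().split()).items():
            vectors.setdefault(word, {})[category] = count
    return vectors


def cosine(u, v):
    """Cosine similarity of two sparse count vectors (assumed comparison formula)."""
    dot = sum(u[c] * v[c] for c in u.keys() & v.keys())
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


# Tiny stand-in for text extracted from two categories.
texts = {
    "food": "bread butter bread butter jam",
    "sports": "football soccer football",
}
vectors = category_vectors(texts)
print(cosine(vectors["bread"], vectors["butter"]))
print(cosine(vectors["bread"], vectors["football"]))
```

Once `vectors` has been saved to disk, answering a query only needs two lookups and one small calculation, which matches the point about preprocessing making the Algorithm as fast as reading a file.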

The end of this Algorithm is identical to the first Algorithm, but the Words Radios Algorithm (which looks at the words within a radius of each word) clearly has more vectors. Preprocessing this data is both time consuming (it takes ~5 days) and space consuming (the data grows from 2GB to 15GB), and just preprocessing the data caused at least 10 Out of Memory Exceptions (Python’s automatic garbage collection apparently did not free the memory in time, so after every category I had to call gc.collect() manually).
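The pattern of working one category at a time and collecting garbage in between might look roughly like this. The function names and the two tiny temporary files are hypothetical; only `gc.collect()` itself is the real standard-library call.

```python
import gc
import os
import tempfile
from collections import Counter


def count_category(path):
    """Count the words of one category file, reading it line by line."""
    counts = Counter()
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            counts.update(line.lower().split())
    return counts


def process_categories(paths, handle_counts):
    """Process one category at a time so only one chunk is held in memory."""
    for category, path in paths.items():
        counts = count_category(path)
        handle_counts(category, counts)  # e.g. write the counts to disk
        del counts
        gc.collect()  # explicit collection between categories


# Usage with two tiny stand-in category files.
with tempfile.TemporaryDirectory() as folder:
    paths = {}
    for name, text in {"pets": "dog snake dog", "food": "bread butter"}.items():
        paths[name] = os.path.join(folder, name + ".txt")
        with open(paths[name], "w", encoding="utf-8") as out:
            out.write(text)
    totals = {}
    process_categories(paths, lambda cat, counts: totals.update({cat: dict(counts)}))

print(totals)
```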

The calculation time for ~350 pairs of compared words was ~25 hours, which of course can’t be used in real-time AI conversations. With the preprocessing, though, it hardly matters whether there are 350 or 35k words to compare: it will take approximately the same time. For example, on three categories of ~120MB, ~350 pairs take ~56 minutes but 3 pairs still take ~30 minutes.

It’s important to note that both Algorithms give close results; for example, bread and butter have a Similarity of 0.56, which is pretty high.

As can be seen, Basic almost always gives a greater result than Words Radios. Basic also has some weird results: it rates Maradona as more Similar to football than soccer is, even though many places (not the USA) use football and soccer as synonyms, whereas Words Radios rates soccer as more similar to football.

Since the Words Radios actually uses a form of Context Relation (though not very lexical) it is considerably more accurate.

Remember I claimed it was all about the text? Well, these results were produced with just a few categories, and suddenly Arafat is similar to Jackson. How weird is that?

Another difference is the calculation time: the Simple Algorithm takes ~17 seconds, whereas the Words Radios Algorithm takes ~56 minutes.

BTW remember night and knight? Well the simple algorithm returned 0.79 Similarity for those 3 categories… And the Words Radios returned 0.48 Similarity.

So do you have any questions? Suggestions? Too long? Too short?

Tell me what you think…

Keywords: similarity, NLTK, search, AI

![Similar3[3] Similar3[3]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEixFuFnNuwM25XepI5zcr0Na0NjfdU-wShmZal8zqtIJnF5zQhsYAS6fky6w-jW6ZtNDOoRKWrGrE2c7swsDj3Z64_CJlDYYmTqh9SZbPcCSzXYNQMyyUasCzyu3y-4CC4A9q2Ln19N-cBS/?imgmax=800)